An AI founder shared something with me last week, and there wasn’t an ounce of hypocrisy in it: she doesn’t use AI to write her personal and thought leadership content.

She does use it to personalize outreach emails to potential buyers, however, and she does this without hesitation — because this step follows a very manual process of identifying, qualifying and uncovering the needs of potential buyers based on their profile attributes.

Let’s compare that with another conversation I had over Slack. This time, it came from the founder of a leadership coaching business primarily focused on tech startups. She shared with me that she had been paying for a Substack subscription, and the author had “obviously” started using AI to write his posts.

She was upset.

But she was even more upset about receiving AI-generated feedback from a professor at a well-known university after paying top dollar for a continuing education class.

Both of these exchanges point to a bigger debate happening in the content space: When is it okay to use AI in content, and when is it not?

I believe, though, there’s a nuance that most people aren’t picking up on in that conversation:

Who deserves human-written content, and who deserves AI-generated content?

To that end, this essay is an argument for content equity and discernment. Let’s dig in …

The issue of audience

And I don’t think it’s because they use AI.

I think it’s because they don’t use AI for that writing. (Or if they do, they use it in a limited way that doesn’t taint the unique voice and perspective their followers have come to expect.)

However, many businesses (the very businesses these same founders and leaders run) are using AI to generate marketing, communication and sales content ad nauseam.

What’s the difference? Founders and leaders write content for their followers. Their businesses write content for their customers.

So the difference appears to be the definition of the audience: Followers of a personal brand vs. customers of a business.

Following that logic, the implication is that followers deserve human-written content, but customers don’t.

Why is that?

If this rings true for you, have you ever stopped to think about why it’s happening? Customers are human beings, too, with needs and desires, problems and questions, situations and contexts. In industries like tech, the customer may be more complex (the buying process may involve several layers of decision-makers), but everyone involved is still very much human.

How did businesses become so disconnected from the humans on the other side of the buying equation? I’m not sure.

But I’m sure of one thing: When you treat customers like the humans they are, you create a better experience for them, and you’re more likely to build a relationship that stands the test of time. Given lengthening buying cycles and increasing competition, that matters more than ever.

So here’s my first challenge for you to consider:

If you don’t use AI to write content for your personal audience, maybe you should question why it’s okay for your customers to receive AI-generated content.

The issue of experience

You might argue that a “good content experience” is subjective. And you wouldn’t be wrong. But let’s look under the hood.

Do you agree with the woman from the intro who was upset about paying for a Substack subscription, only to be sent AI-generated content?

I think most of us would be upset about that, honestly.

But that reaction means we already believe an AI-written post is less valuable than a human-written one.

So if the AI-written content isn’t as valuable, why do businesses produce it at all?

Let’s consider why anyone uses AI to generate content: speed and quantity. You can produce content faster and in a greater volume with AI. Humans take time to research, think, plan, write, edit — AI can do that in an instant.

Value isn’t part of the content equation. Speed and quantity are the deciding factors.

But this is causing another problem …

Twin waves: The tsunami of crappy content + the increase in touchpoints before sales contact

But much of the tsunami of content was written for algorithms, not humans.

It’s getting harder and more time-consuming for audiences to sift through search results, social feeds, and their inboxes to find content of value.

And this creates complications, especially for tech companies, because most tech buyers rely heavily on content in their research and decision-making processes. (More on this in the next section.)

In 2015, it took an average of 6-8 marketing touches to generate a viable sales lead. In 2024, it takes 71.

If we now have mass quantities of algorithmic and AI-generated content that doesn’t really serve the humans who rely on it, yet buyers rely on it more than ever, and leaders are okay with that for their customers (but not their own followers) …

There’s a big disconnect.

And that disconnect has led to …

The issue of accurate, useful information

Trust is hard-won and easily lost. That’s truer in business than anywhere.

I’ve been seeing the rise of the replicant effect since the end of 2022: When customers know that even some of a company’s content is AI generated, they doubt the veracity of all of the content.

If a customer can’t trust a company’s content, they can’t trust the company. And that impacts the immediate and long-term efficacy of both marketing and sales.

The problem is compounded, however, by the rising use of AI for product research.

What that all adds up to for businesses is that search results aren’t answering customer questions accurately, thoroughly … or often at all.

To solve this problem, customers are turning to AI.

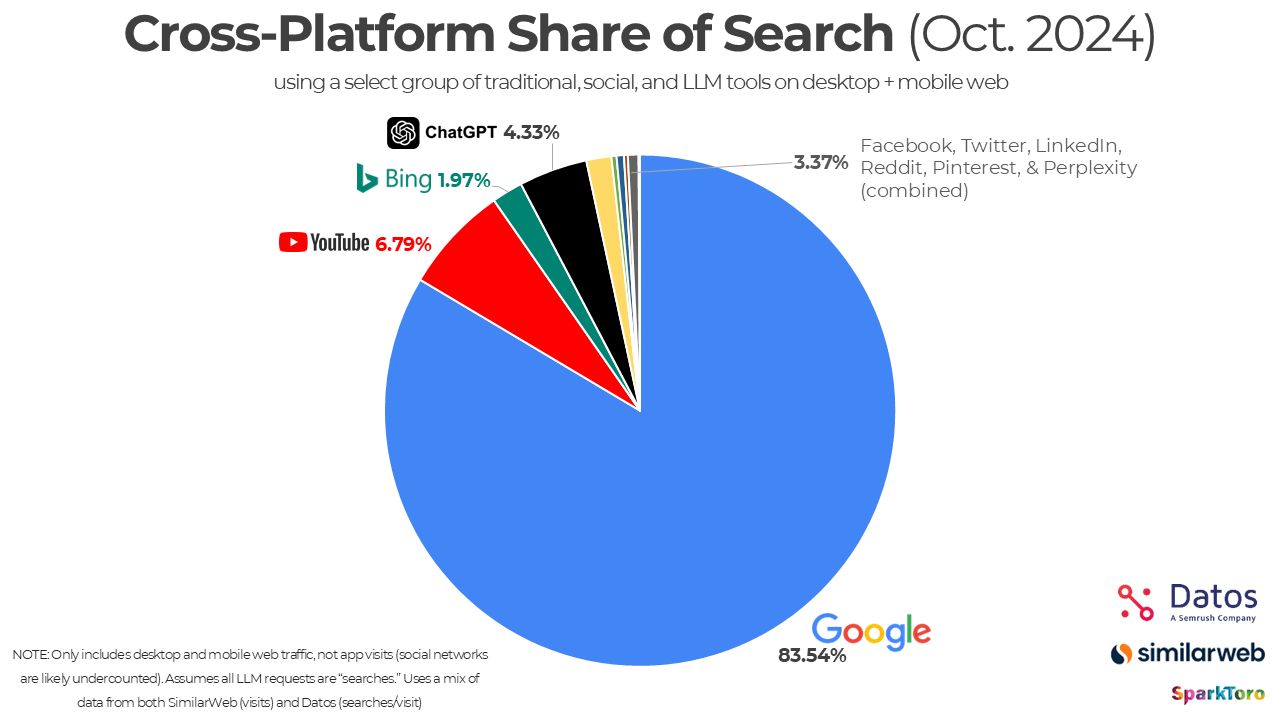

The percentage of search happening on ChatGPT alone is interesting to consider:

Source: Rand Fishkin on LinkedIn

Once again, though, this trend creates more problems for companies.

When your audience is using AI to get answers to their questions, they aren’t necessarily getting accurate information.

And I’m not just talking about hallucination — though that is an issue. I’m talking about the fact that generative AI can’t infer intent.

Here’s an example …

Recently I was researching what AI companies are doing with their marketing. I was curious about this topic because I’d noticed how differently AI-first companies approach their marketing compared to how SaaS companies have traditionally done it.

Not finding what I was looking for in search results, I began going to specific, high-integrity websites I knew would likely have answers. I found some good stuff there, but ever the researcher, I needed more. And the manual effort of going to individual websites was beginning to add up. So, wanting to speed up the process, and out of sheer curiosity, I pulled up Perplexity to see if it might point me in a new direction. (I have a hard rule about never using AI to write for me — but I do use AI in my process sometimes!)

The results looked legit at first glance …

but I know enough to look beyond the pretty package. And I know where to look. I clicked the three dots at the bottom of the results, and hit “View Sources” …

Every single source was about how to use AI in your content marketing, not how AI companies are using content marketing. Every. single. source.

So despite the polished presentation, the results were completely useless for my task.

Here’s what scares me about this: I knew to check the sources. Most people don’t.

Most people would have taken the Perplexity output and either published it as their own (don’t get me started on why THAT’S a problem …) or used it as research.

Now let’s get back to how your customers are encountering this situation.

People are abandoning search for AI tools that seem to answer their questions because search has gotten so bad — but they’re not questioning where the answers came from.

And then they’re making decisions based on answers that may well be wrong.

All because they weren’t finding what they needed in search. They weren’t finding what they needed in mass-produced, keyword-centered SEO nonsense.

All of that leads me back to the main question:

Why are businesses continuing to produce that nonsense, en masse — and why are leaders okay with that, as long as it’s customer-facing?

The only answer I can come up with is this:

Most leaders and their marketing teams aren’t thinking about it because customers aren’t directly telling them it’s a problem.

Customers are just solving the issue themselves by seeking alternate sources of information.

So is it a problem, then, if customers aren’t screaming about it?

I believe it is. And here’s why:

When you get content right — when it’s useful, helpful, valuable, and solves a real problem — customers notice. And they speak up.

This was a fintech company. Fintech isn’t known for its humanity, but this one took a human approach to its content, and its human customers spoke up and signed up.

- See marketing and sales content as relationship-building assets, important sources of information, and 24/7 representatives of the company …

- Still believe that high-value content matters in business …

- Believe that customers are the most important thing …

Consider how your content is getting created

This is what I believe in:

- Content equity. Everyone deserves good, valuable, soulful content. Followers, leads, prospects, customers, employees — everyone.

- Discernment. Using AI isn’t bad — as long as it’s used with discernment.

Founders: Keep up the thoughtful content you’re creating. It’s valuable, and it’s so needed. But if your company is generating gobs of customer-facing content with AI, think about why that is, and why you’re okay with it.

Startups: Treating content production as a numbers game means you’re getting lumped in with everyone else. Swimming in the sea of sameness means your customers can’t tell you apart from the competition.

This goes beyond differentiation.

Your solution might be groundbreaking. Your founder might be the next Fortune cover story. But if your content doesn’t stand apart … your company doesn’t stand out.

Human-driven and human-written content (even with an AI assist to make it better) stands out because it serves the reader.

Come talk to me about how your content is getting done. Let’s find opportunities to add humanity to your writing process so the customers you’re trying to reach will sit up and take notice.